It’s the moment you have been waiting for. The easy button to deliver Kubernetes on vSphere is here, with all the policy, standards and governance you have come to expect from vRealize Automation.

Pre-Reqs

- An installation of vRealize Automation 7 – Enterprise Edition

- A reservation with a static IP pool.

- A CentOS 7 or RHEL 7 blueprint with vRA Agent installed and tested. See my guide here.

- The Kubernetes Blueprint code downloaded from here.

- vRealize Cloud Client 4 downloaded and installed from here.

- vRA Icon Pack downloaded from here

- A quiet place where you will not be interrupted

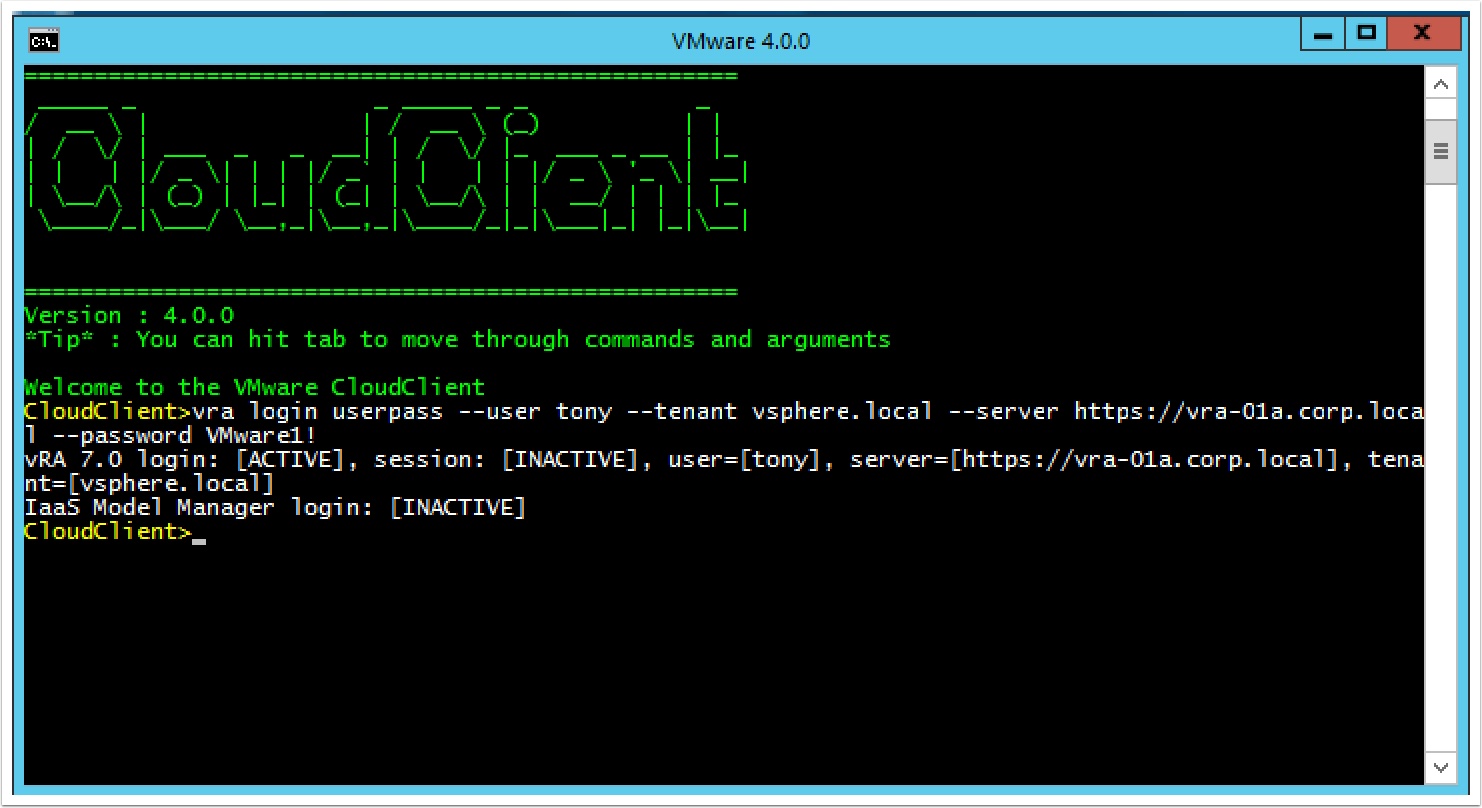

First, let’s upload the blueprint using Cloud Client.

Log in to Cloud Client as a cloud admin. You will need the Infrastructure Architect and Software Architect roles.

vra login userpass --user tony --tenant vsphere.local --server https://vra-01a.corp.local --password VMware1!

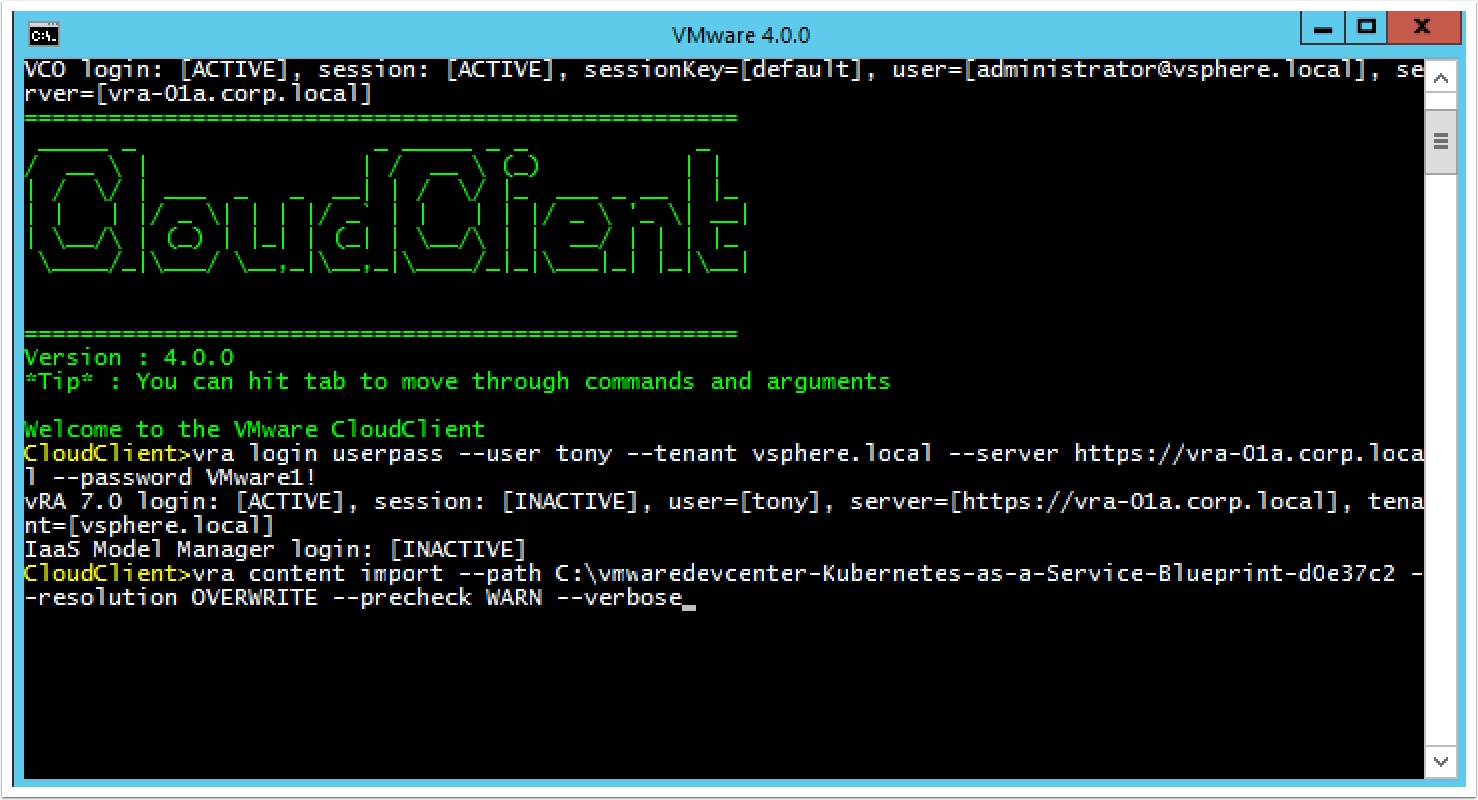

Now import the blueprint you downloaded. Make sure you specify the correct path and file name.

vra content import --path C:\Kubernetes-composite-blueprint.zip --resolution OVERWRITE --precheck WARN --verbose
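As a rough sketch, the import step can be wrapped in a small Python helper that checks the archive is where you think it is and assembles the same Cloud Client line shown above. The function name `build_import_command` and its `must_exist` switch are hypothetical; the flags are only the ones used in this post. The helper builds the command string and does not run Cloud Client itself.

```python
from pathlib import Path


def build_import_command(zip_path, resolution="OVERWRITE", precheck="WARN", must_exist=True):
    """Assemble the 'vra content import' line used in this post.

    Hypothetical helper: it only returns the text to paste into the
    Cloud Client shell. It does not invoke Cloud Client.
    """
    # Catch a wrong path or file name before Cloud Client does.
    if must_exist and not Path(zip_path).is_file():
        raise FileNotFoundError(f"Blueprint archive not found: {zip_path}")
    return (
        f"vra content import --path {zip_path} "
        f"--resolution {resolution} --precheck {precheck} --verbose"
    )


if __name__ == "__main__":
    # must_exist=False so the example runs without the real archive present.
    print(build_import_command(r"C:\Kubernetes-composite-blueprint.zip", must_exist=False))
```

With the default `must_exist=True`, a mistyped path fails early with a `FileNotFoundError` instead of surfacing later as an import error.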

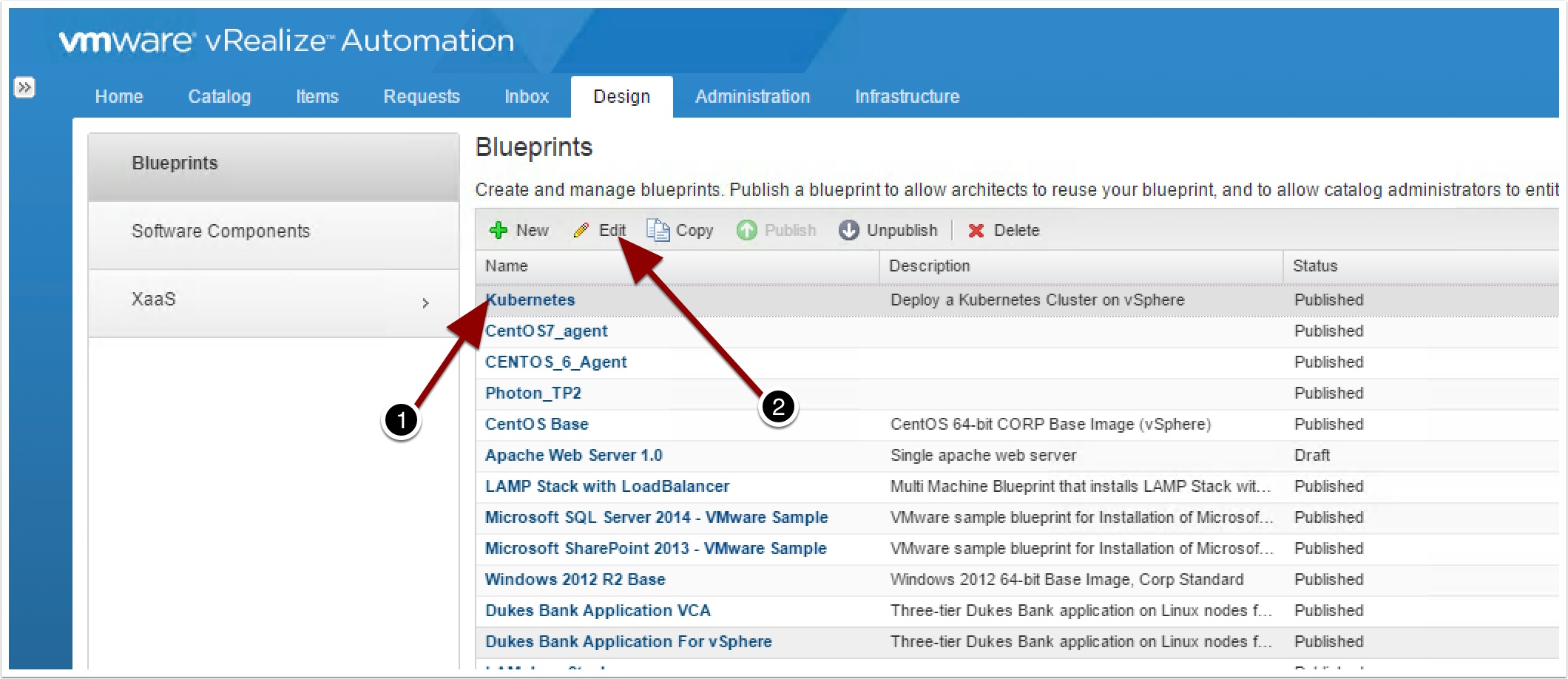

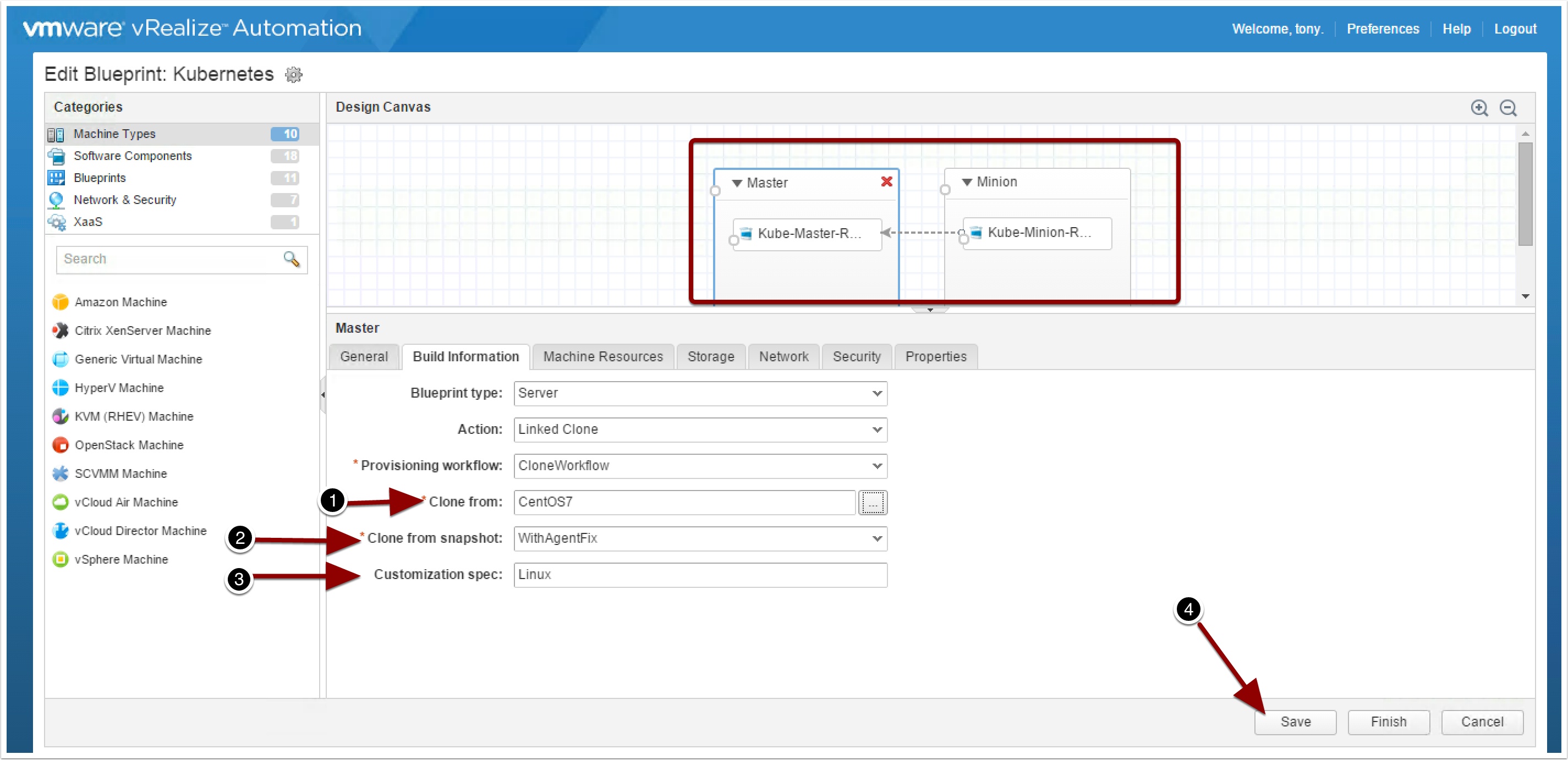

You will need to update the Master and Minion machines in the blueprint to point to your template, your snapshot (if using Linked Clones) and your customization spec.

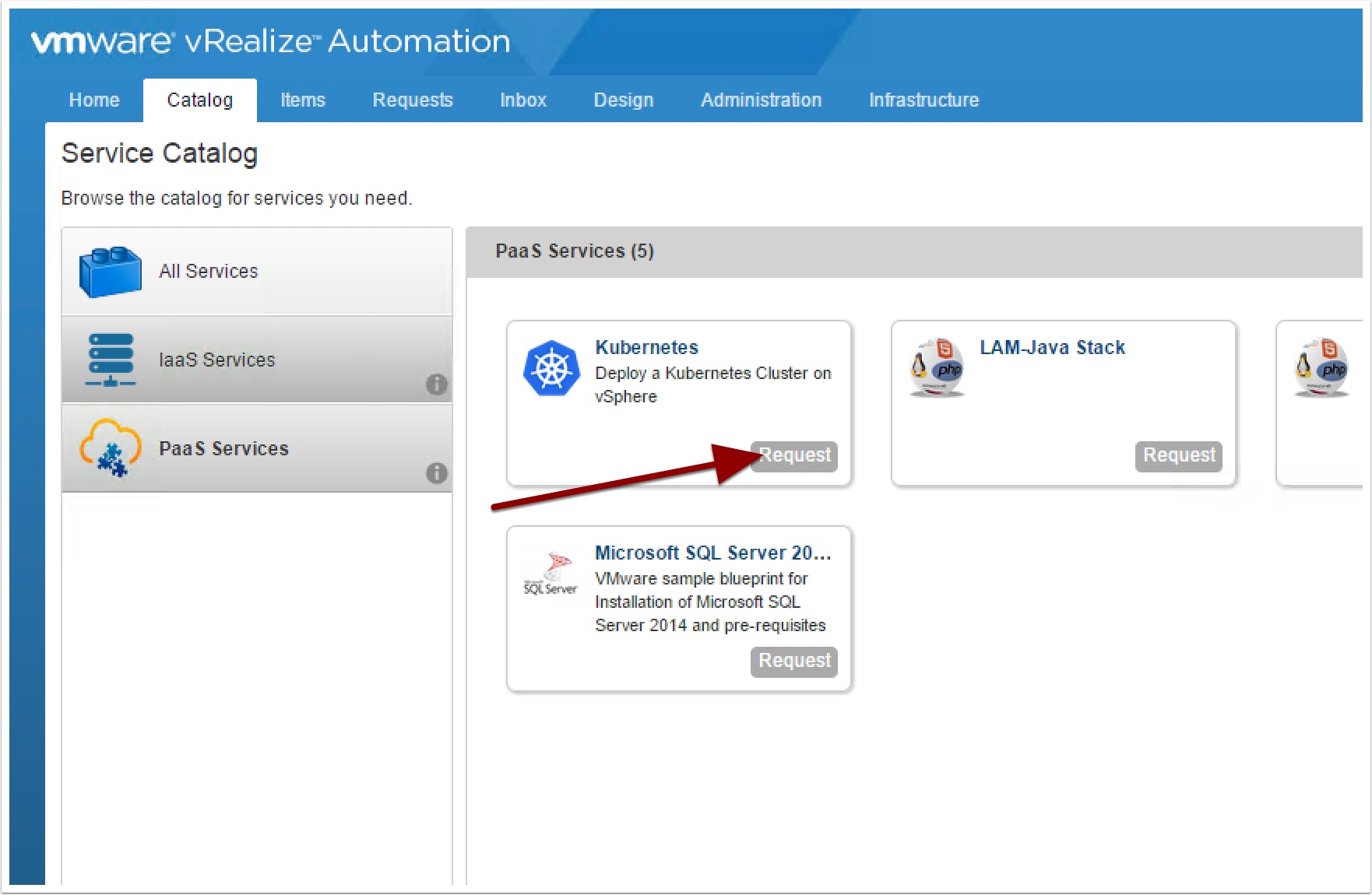

Now go to the Administration tab, Catalog Management, Catalog Items, then change the icon of the catalog item and entitle it to a service.

Note, you will find the Kubernetes Icon in the icon pack.


Hi Ryan, we are using vRA 7.2 & 3 Advanced in our datacentres. I am looking through this article and I can’t seem to see at what point you would need vRA Enterprise from the screenshots you have put up.

We are very keen to do this sort of thing but we are just in the experimenting stage at the moment.

Great article though.

Thanks

Hi Ryan,

great article, congrats!

I have the same question: Why do we need the Enterprise Edition of vRA? At which step is Enterprise needed?

Thanks JO!

In this blueprint I am using Software Components, which are only available in the Enterprise Edition.

Thanks

VMware came back with the following:

Kubernetes blueprint is an XaaS (software authoring) feature, which falls under Enterprise.


Hi!

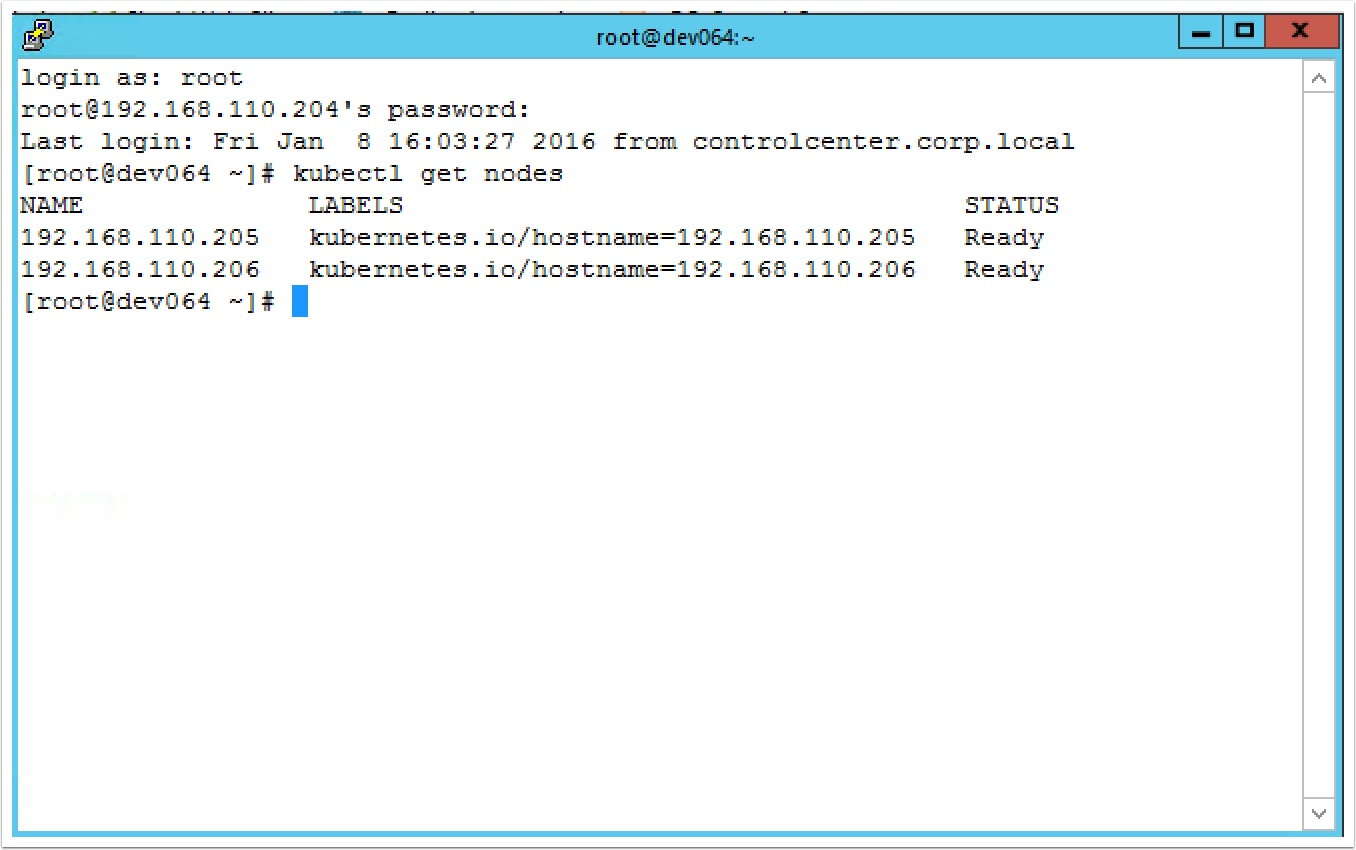

I’ve encountered an error. On the master node I run “kubectl get nodes” and I get “No resources found”. I then ran “journalctl -u kubelet” on both the master and the minion. There are no entries on the master, but the minion says:

kminion-002 systemd[1]: Dependency failed for Kubernetes Kubelet Server.

I then went to see the logs on the vRA request, and found an issue on the install and start logs:

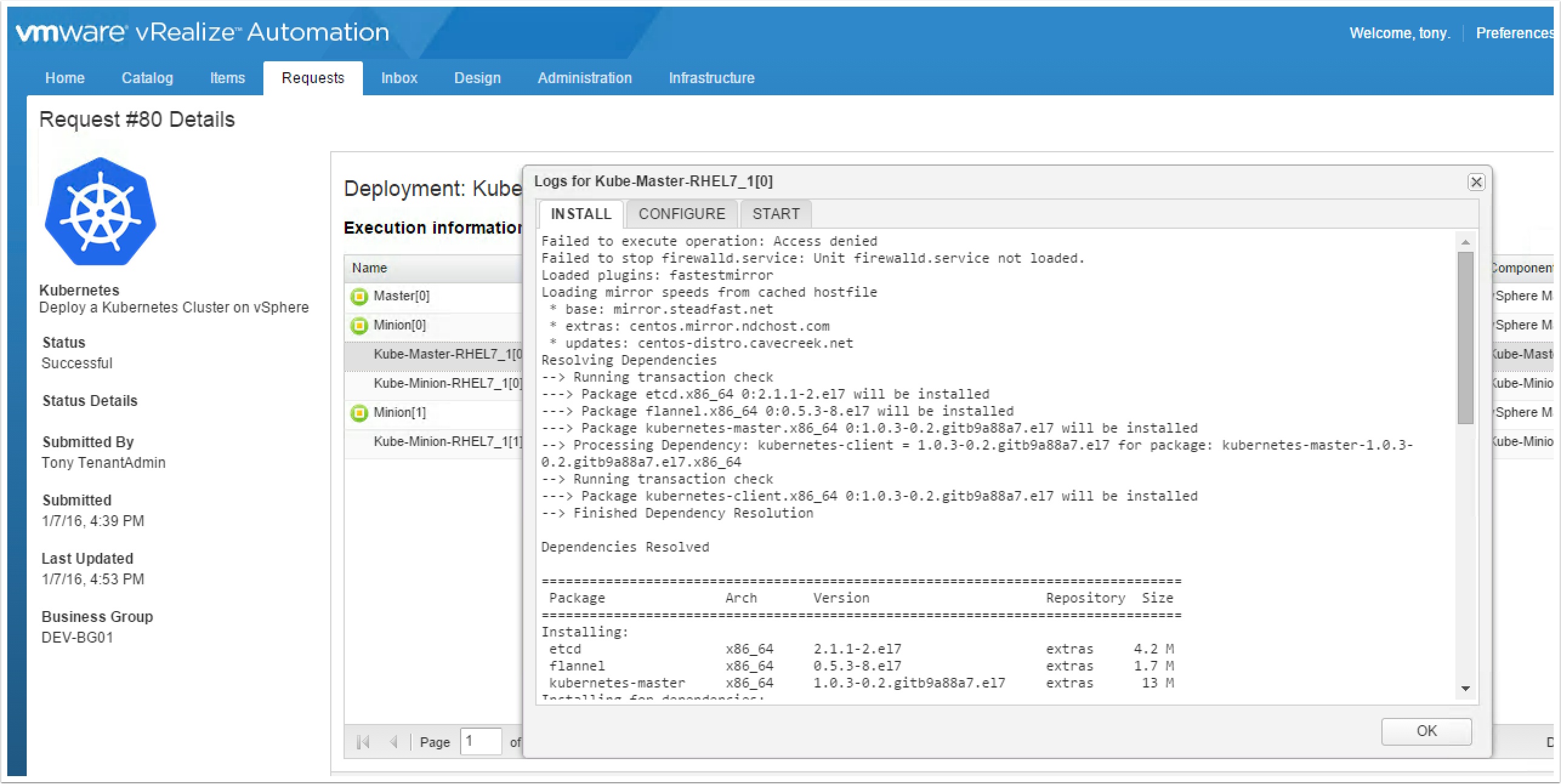

Install logs for Kube-Master:

Complete!

Generating RSA private key, 2048 bit long modulus

………………….+++

………………………………..+++

unable to write ‘random state’

e is 65537 (0x10001)

And Start logs for Kube-Master:

controllermanager.go:558] Failed to start certificate controller: open /etc/kubernetes/ca/ca.pem: no such file or directory

The Kube-Minion install logs look OK, but on Start logs:

A dependency job for docker.service failed. See ‘journalctl -xe’ for details.

Job for flanneld.service failed because a timeout was exceeded. See “systemctl status flanneld.service” and “journalctl -xe” for details.

systemctl and journalctl give:

kminion-002 flanneld-start[12570]: E0311 00:14:34.436765 12570 network.go:102] failed to retrieve network config: client: etcd cluster is unavailable or misconfigured; error #0: dial tcp 127.0.0.1:2379: getsockopt: connection refused

I’m running CentOS 7 minimal install, on vSphere 6.5 and vRA 7.3

Did you find a solution?

Not exactly.

The errors are still there, but I found that the minions didn’t get the master IP. I can’t recall what I did to solve it, but look for the network configuration.
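The flanneld error quoted above shows the minion dialing 127.0.0.1:2379, which would be consistent with the minion never receiving the master IP, assuming etcd is expected to run on the master in this blueprint. As a hedged sketch, a few lines of Python can pull the dialed endpoint out of such a log line and flag a loopback address. The function names are hypothetical and the pattern only covers the IPv4 "dial tcp" form seen in that one log line.

```python
import ipaddress
import re

# Matches the "dial tcp <ipv4>:<port>" fragment in the flanneld error quoted above.
DIAL_RE = re.compile(r"dial tcp (\d{1,3}(?:\.\d{1,3}){3}):(\d+)")


def etcd_endpoint_from_log(line):
    """Return the (ip, port) flanneld tried to reach, or None if not present."""
    match = DIAL_RE.search(line)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def points_at_loopback(line):
    """True when the log line shows a dial to a loopback address."""
    endpoint = etcd_endpoint_from_log(line)
    return endpoint is not None and ipaddress.ip_address(endpoint[0]).is_loopback


if __name__ == "__main__":
    # Tail of the minion log line from the comment above.
    log_line = (
        "failed to retrieve network config: client: etcd cluster is unavailable "
        "or misconfigured; error #0: dial tcp 127.0.0.1:2379: getsockopt: connection refused"
    )
    print(etcd_endpoint_from_log(log_line))  # ('127.0.0.1', 2379)
    print(points_at_loopback(log_line))      # True
```

If the check returns True on a minion, the master address passed to that minion is the first thing to look at.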

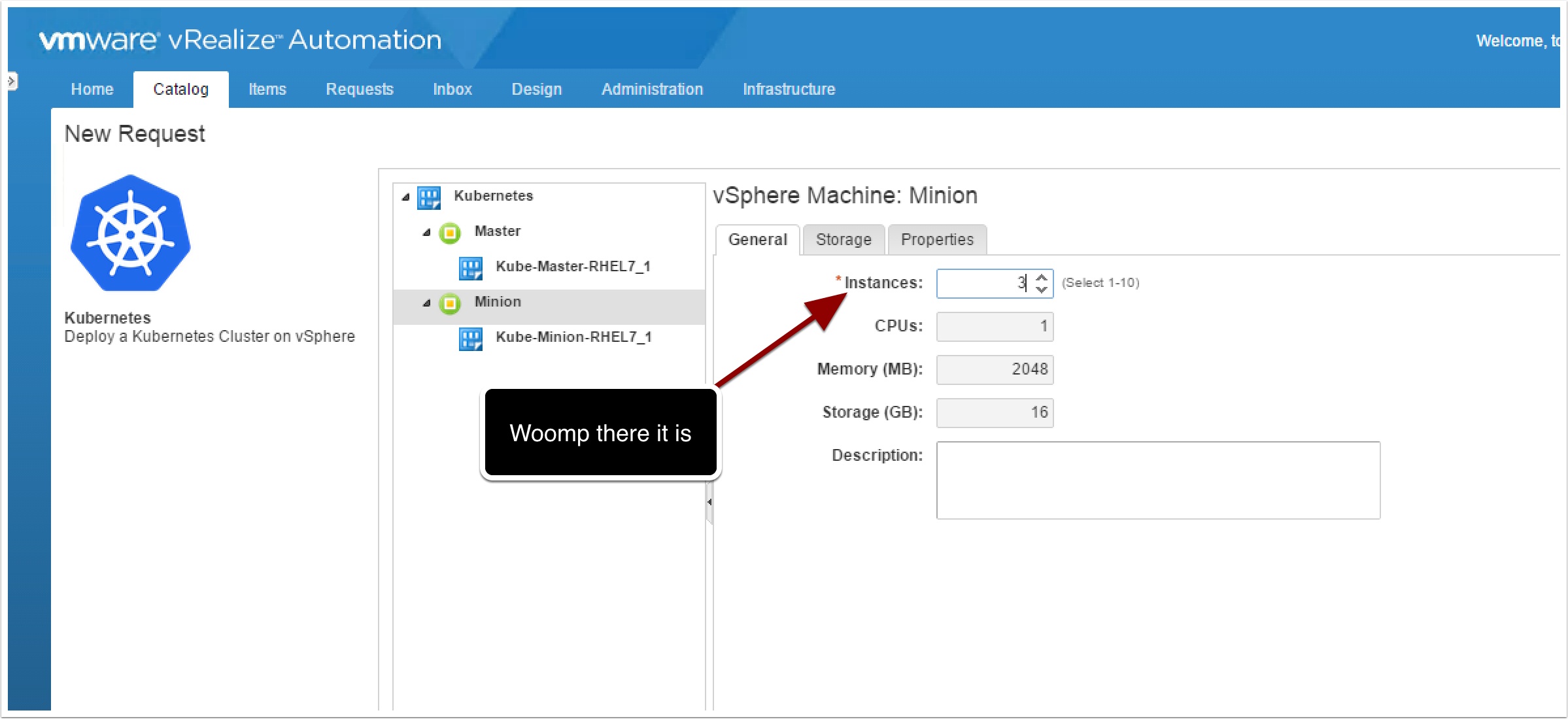

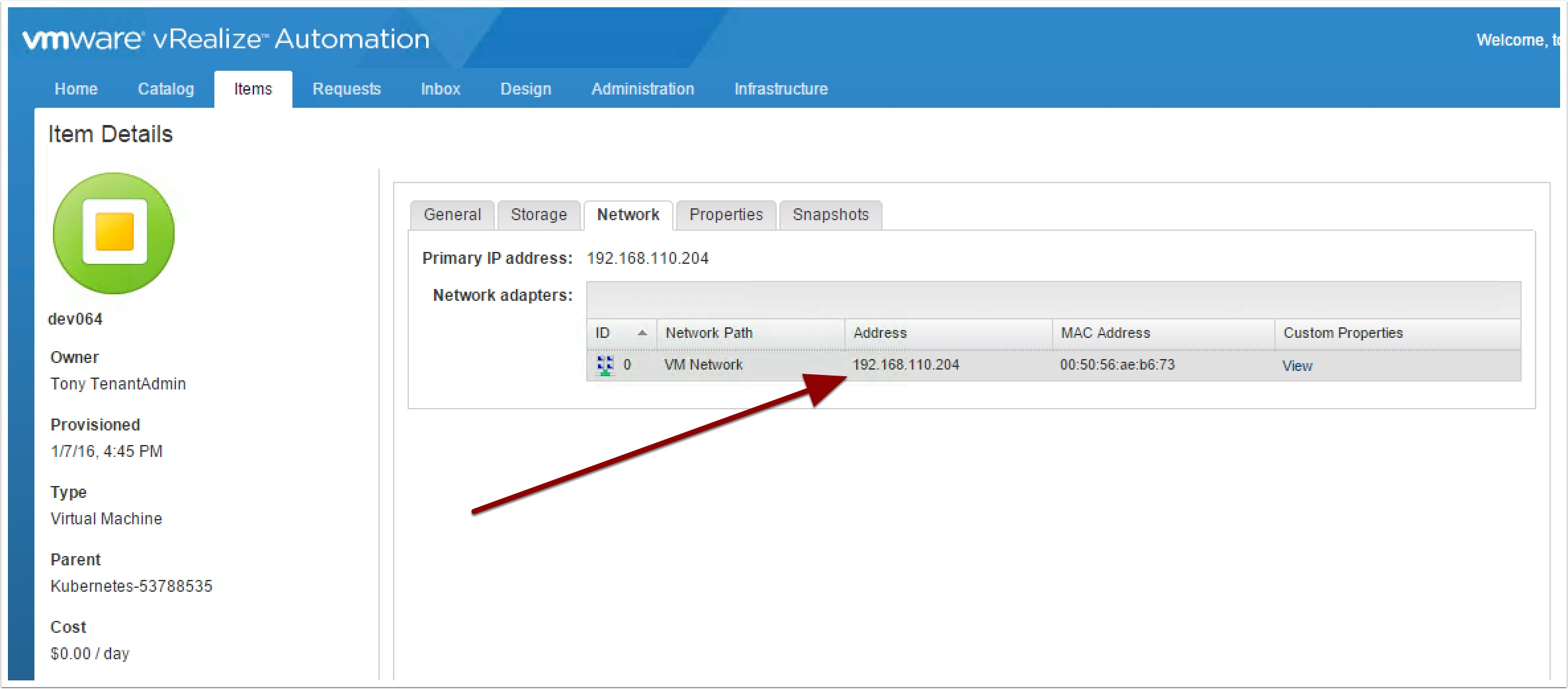

Nice blueprint! One minor update is needed to the article though. After importing the blueprint, updating the VM template and snapshot name, and adding my network, one additional check was needed: it seems the “master_ip” property of the Minion software component Kube-Minion-RHEL7_1 was not set. My first deployment was successful, but there were no nodes registered because they didn’t know their master_ip. After ticking the “Binding” box, adding the value _resource~Master~ip_address and saving, my next deployment successfully showed the nodes registered to the master.
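The binding value in the comment above follows a `_resource~<component>~<property>` shape. As a small illustration, assuming that pattern holds for other components and properties (only the single value `_resource~Master~ip_address` is confirmed here), a hypothetical Python helper could build such strings and reject obviously malformed parts:

```python
def binding_expression(component, prop):
    """Build a binding string shaped like _resource~Master~ip_address.

    Hypothetical helper: the format is inferred from the one value quoted
    in the comment above, not from vRA documentation.
    """
    for part in (component, prop):
        # An empty part or an embedded "~" would break the three-field shape.
        if not part or "~" in part:
            raise ValueError(f"Invalid binding part: {part!r}")
    return f"_resource~{component}~{prop}"


if __name__ == "__main__":
    print(binding_expression("Master", "ip_address"))  # _resource~Master~ip_address
```

The component name has to match the machine name on the blueprint canvas, which is "Master" in the deployment described above.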

My environment: vRA 7.4 GA, CentOS 7.5.4.1804 (Core)