Ever wonder what the difference is between vDS backed and NSX-T backed networks when deploying Workload Management?

In this guide I will explain the differences so that you can make the best choice for your organization.

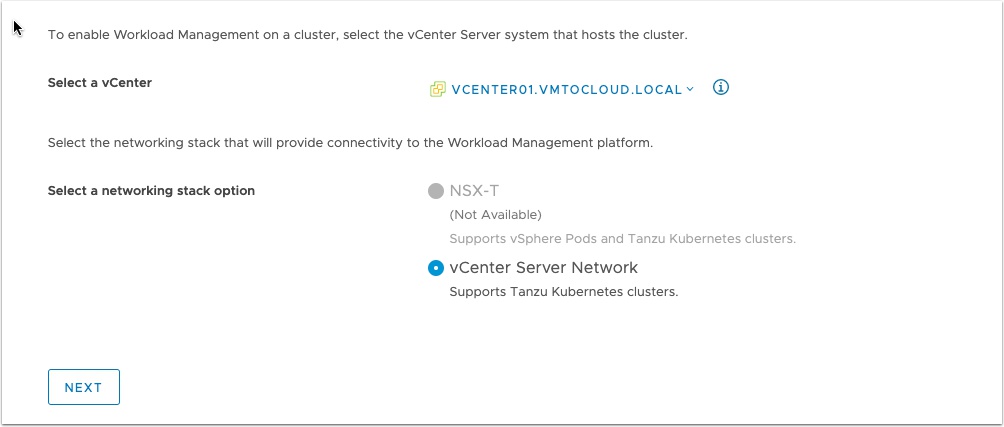

Let’s start with vDS backed networks

vDS backed networking does not require NSX-T to be deployed and configured. This can be a nice shortcut, but there are some drawbacks that may mean it does not meet your requirements.

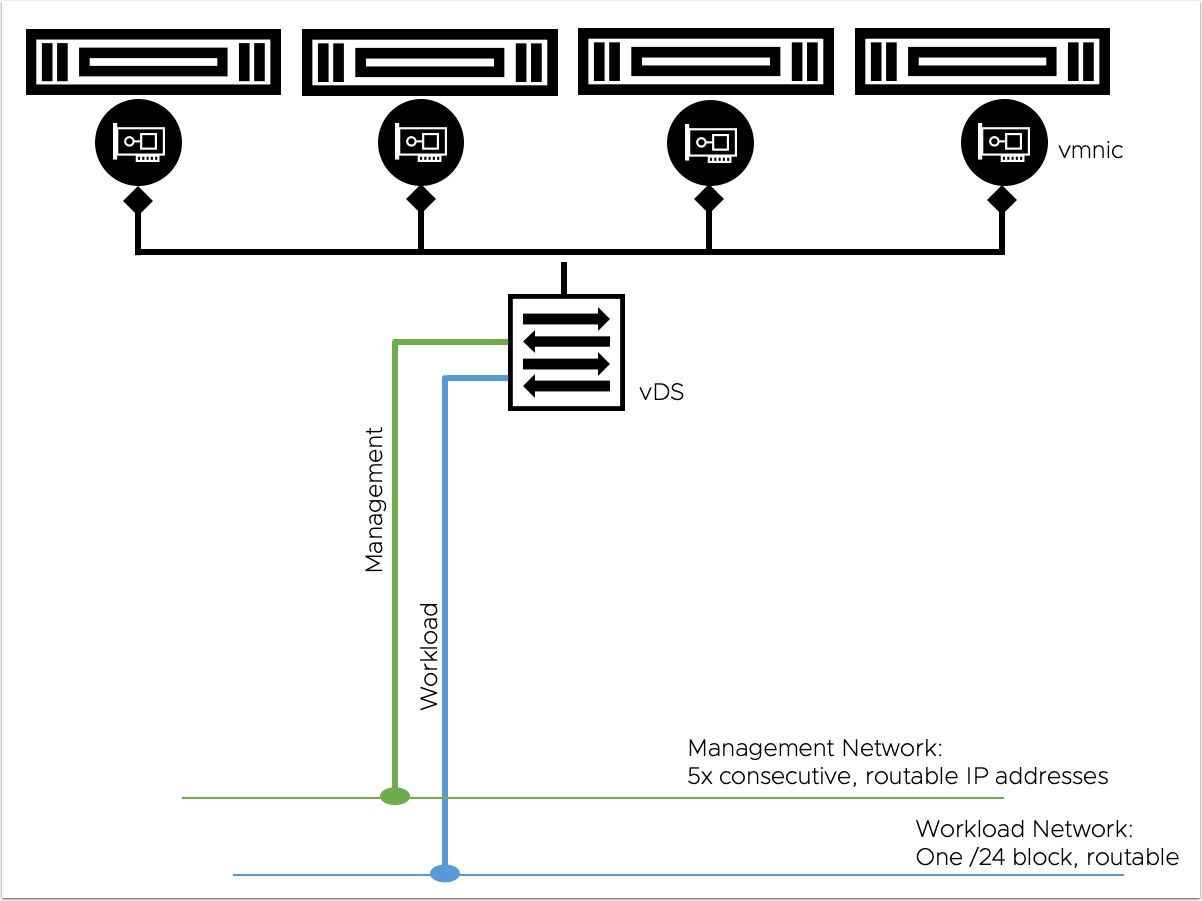

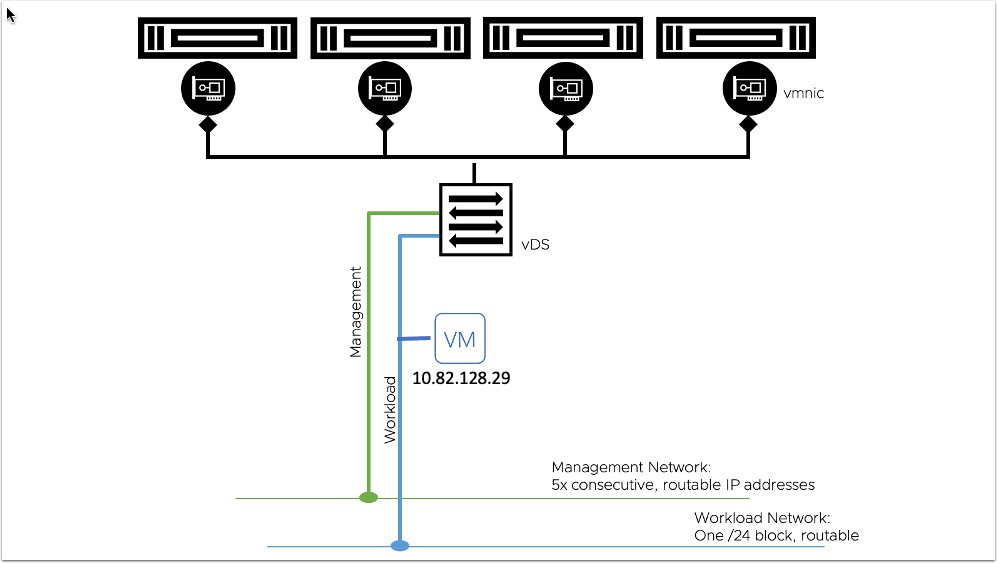

As you can see from the diagram above, vDS backed networks require a VLAN, and that VLAN must be routable. You are probably thinking, so what? I will just get a new VLAN from my network team. For proof of concept and small deployments this is OK, but for larger deployments you may want the private, non-routable networks that NSX-T backed networks can provide.

Now this may not matter too much for Tanzu Kubernetes Cluster containers, as they run inside guest VMs on private address space.

For VM Service VMs this could become a scale and security concern because, as the diagram below shows, they drop right onto the routable network. In some cases this could also be exactly what you want. It is similar to legacy AWS EC2, where VMs are not in a VPC.

Another drawback of vDS backed networks is that you cannot run containers directly on ESXi (vSphere Pods). You will also need to bring your own load balancer; HAProxy and NSX Advanced Load Balancer are both supported.

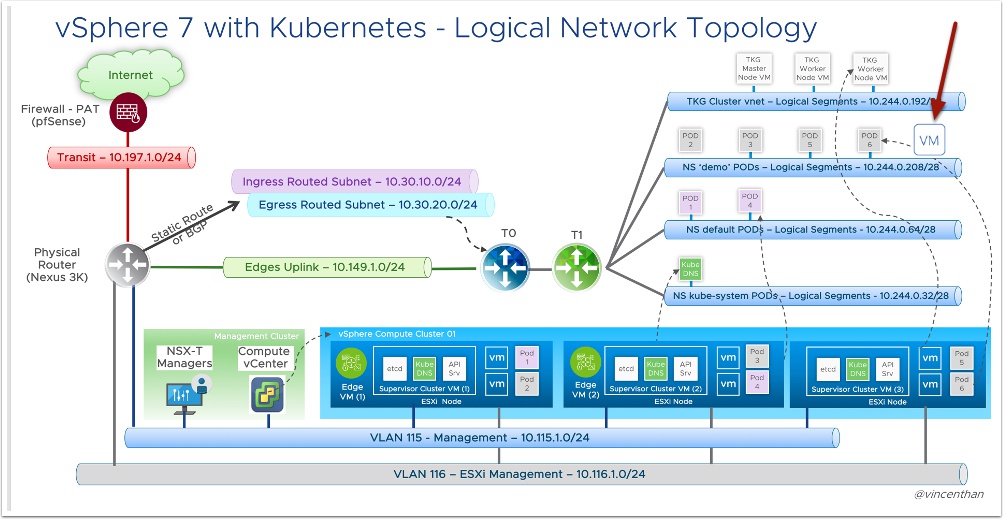

Now let’s take a look at NSX-T backed networks

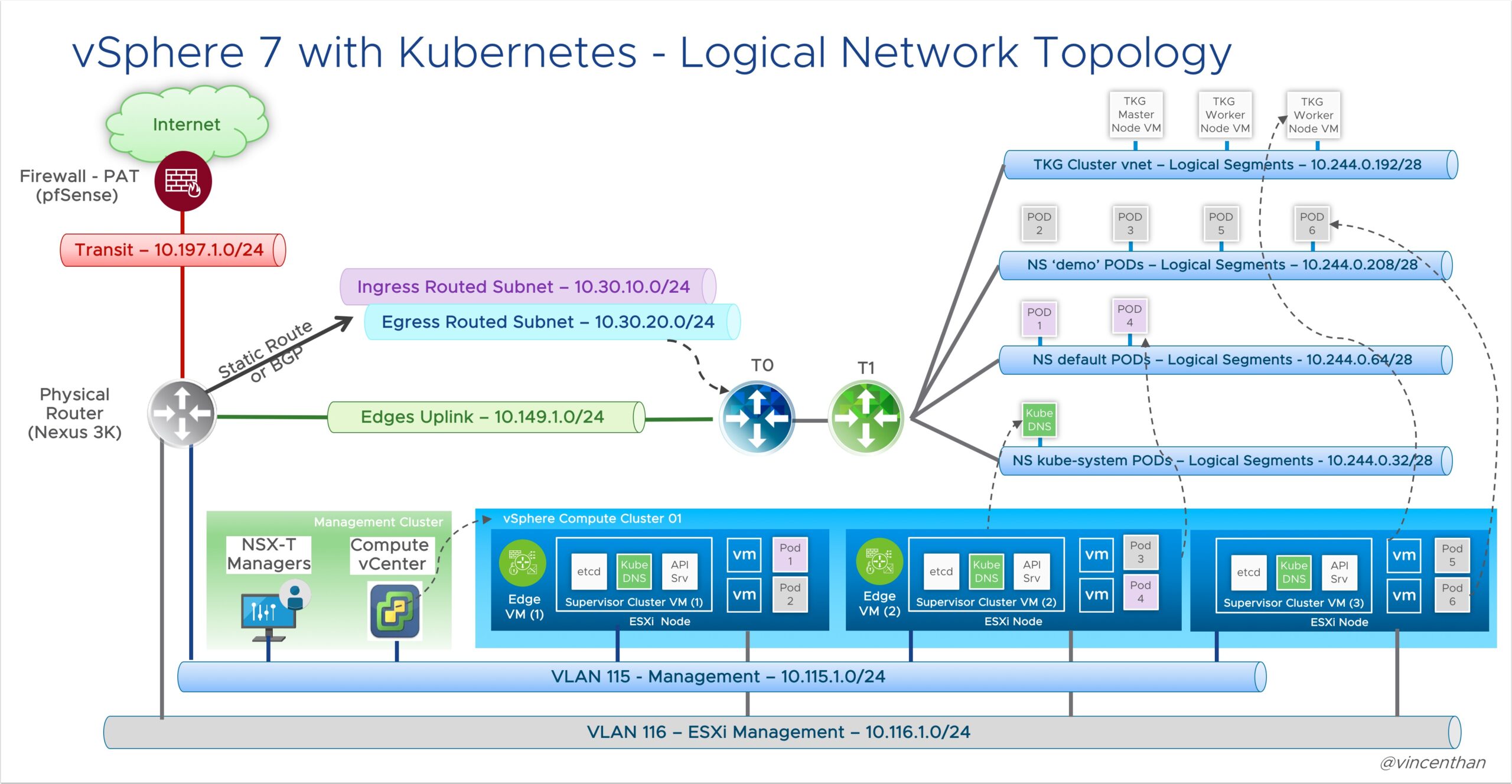

Don’t be confused by the diagram above; I know it’s a lot to take in. I will make it super easy. All we are doing is using a virtual router to create private networks on the fly. Many of you are already doing this if you have a home lab with physical switches and routers. Your home Wi-Fi is even doing this. So think of it like a private network you just set up: you bought a Linksys router, connected a couple of desktops to it, and opened the firewall to allow internet access via your ISP.

Where this gets interesting is that you can now run containers directly on the ESXi hosts, because they can land on private address space. You can also scale much further because you are not gobbling up your limited VLAN space. Where it really becomes necessary is if you plan to scale the VM Service, as those VMs now land on a private address and are not routable to the whole world unless you allow it. This is similar to AWS EC2 VPCs.
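To make the "private networks on the fly" idea a bit more concrete, here is a minimal Python sketch that carves small private subnets out of one larger private range, with no new VLAN needed for each. The pool `10.244.0.0/20` and the `/28` subnet size are hypothetical values I picked for illustration; they are assumptions, not settings taken from this guide or from your environment.

```python
import ipaddress
from itertools import islice

# Hypothetical values for illustration only (assumptions, not product settings).
POOL = ipaddress.ip_network("10.244.0.0/20")   # one private range behind the virtual router
SUBNET_PREFIX = 28                              # size of each private network carved from it


def carve_subnets(pool, new_prefix, count):
    """Return the first `count` subnets of length `new_prefix` from `pool`."""
    return list(islice(pool.subnets(new_prefix=new_prefix), count))


# The first few private networks that could be handed out on demand.
first_three = carve_subnets(POOL, SUBNET_PREFIX, 3)

# How many such networks fit in the pool without consuming a VLAN each.
total_available = 2 ** (SUBNET_PREFIX - POOL.prefixlen)

for subnet in first_three:
    print(subnet, "private" if subnet.is_private else "routable")
print("subnets available in pool:", total_available)
```

The point is only the arithmetic: one private range can be split into many small networks, whereas the vDS backed approach needs a routable VLAN per network.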

So advantages of NSX-T backed:

Ability to deploy containers directly to ESXi (vSphere Pods)

VM Service is highly scalable and more secure

Load balancer built into NSX-T
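The trade-offs in this guide can be summed up as a small decision helper. This is only a sketch of the reasoning above, written in Python; the `Requirements` class, its field names, and `recommend_network_stack` are hypothetical names made up for this example and are not part of any VMware tool or API.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical checklist of what a deployment needs."""
    needs_vsphere_pods: bool = False           # containers directly on ESXi
    needs_private_networks: bool = False       # non-routable networks for VM Service at scale
    wants_builtin_load_balancer: bool = False  # no separate load balancer to bring


def recommend_network_stack(req):
    """Return (stack, reasons) based on the trade-offs described in this guide."""
    reasons = []
    if req.needs_vsphere_pods:
        reasons.append("vSphere Pods cannot run on vDS backed networks")
    if req.needs_private_networks:
        reasons.append("vDS backed networks require routable VLANs")
    if req.wants_builtin_load_balancer:
        reasons.append("vDS backed needs HAProxy or NSX Advanced Load Balancer")
    if reasons:
        return "NSX-T", reasons
    return "vDS", ["no NSX-T-only requirement; simpler to deploy"]


# Example: a small proof of concept versus a deployment that needs vSphere Pods.
print(recommend_network_stack(Requirements()))
print(recommend_network_stack(Requirements(needs_vsphere_pods=True)))
```

Treat the output as a starting point for a conversation with your network team, not a final answer.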

I hope this is helpful. If I am missing anything or need a correction, feel free to reach out.

vSphere with Tanzu using the NSX-T network stack makes use of the NSX-T native load balancer. So using NSX-T for any future deployment may not be a good idea until VMware lays out a roadmap for AVI integration with NSX-T for LB services.

https://blogs.vmware.com/load-balancing/2022/06/22/native-nsx-load-balancing-is-going-away-time-to-migrate-to-avi-load-balancing/

You can use AVI with NSX-T for Tanzu for vSphere today https://docs.vmware.com/en/VMware-vSphere/7.0/vmware-vsphere-with-tanzu/GUID-8908FAD7-9343-491B-9F6B-45FA8893C8CC.html